#10 - Evals for AI: Why Your Application Needs More Than a Vibe

Go beyond vibe checks to ensure your applications work the way you intended

This article is part of a blog series about building effective evaluation systems for your AI application. Go beyond "vibe checks" and use a more systematic way to ensure your applications work as you designed them.

Part 1 (this article): Evaluations and Your AI Application. This article introduces the concept of evaluations and explains how to approach this strategy for improving your applications.

Hi friends,

This article will kick-start a brand new series, and this time, we will explore the world of generative AI. With my background in software engineering, I want to explore how to build robust and predictable AI systems. Let's see what value evaluations bring to our applications.

Building AI Applications from First Principles

For many software developers, writing unit tests is second nature, although your mileage may vary here. Some of us get hung up on code coverage, but for me, pragmatism is key: we build tests that add value and make sense.

Building AI applications is not significantly different from creating any other software application. While there is still value in writing unit and integration tests, developing applications based on these probabilistic models requires much more than that. Where we would have a definitive right or wrong outcome for traditional software projects, LLM-based applications necessitate a different approach.

In-Context Learning

There are two main ways of developing LLM applications these days. The first is fine-tuning AI models, which can help a lot in some specific instances. The second is in-context learning (ICL) and prompt engineering, which many in the know reach for first because they are much cheaper and are often good enough.

We all know what prompt engineering involves: we write instructions or context for the LLM to help it carry out its tasks. ICL is a part of prompt engineering in which we give the model more detailed information by loading the prompt with related data such as guidelines, background and examples, much as we do in Retrieval Augmented Generation (RAG) systems.
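To make the idea concrete, here is a minimal sketch of how such a prompt might be assembled. The function name `build_prompt`, its parameters and the section labels ("Context:", "Examples:") are my own assumptions for illustration, not part of any library; a real application would tailor the layout to its model and task.

```python
def build_prompt(instructions, context_docs, examples, question):
    """Assemble one prompt string from instructions, background context,
    few-shot examples and the user's question (hypothetical layout)."""
    sections = [instructions.strip()]

    # Background material, e.g. guidelines or documents fetched by a RAG retriever.
    if context_docs:
        bullet_list = "\n".join(f"- {doc.strip()}" for doc in context_docs)
        sections.append("Context:\n" + bullet_list)

    # Few-shot examples given as (question, answer) pairs.
    if examples:
        shots = "\n\n".join(f"Q: {q}\nA: {a}" for q, a in examples)
        sections.append("Examples:\n" + shots)

    # The actual question goes last, leaving the answer open for the model.
    sections.append(f"Q: {question}\nA:")
    return "\n\n".join(sections)


prompt = build_prompt(
    instructions="Answer briefly, using only the context provided.",
    context_docs=["Refunds are accepted within 30 days of purchase."],
    examples=[("Do you ship overseas?", "Yes, to most countries.")],
    question="What is your refund policy?",
)
print(prompt)
```

Everything the model "learns" here lives in the prompt itself, which is why this approach is cheap to iterate on compared with fine-tuning.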

AI Applications and the Levers You Can Pull

Building AI applications is an iterative process, like building any software system. For example, when writing software, we typically build it in stages and layer functionality on top of features as we go. We also generally make sure that the functionality is sound first before optimising it.

With AI applications, which in this case are systems built on top of LLMs using in-context learning and prompt engineering, improving the system means strengthening our ICL and prompt engineering techniques and revisiting the choice of language and embedding models the application uses.

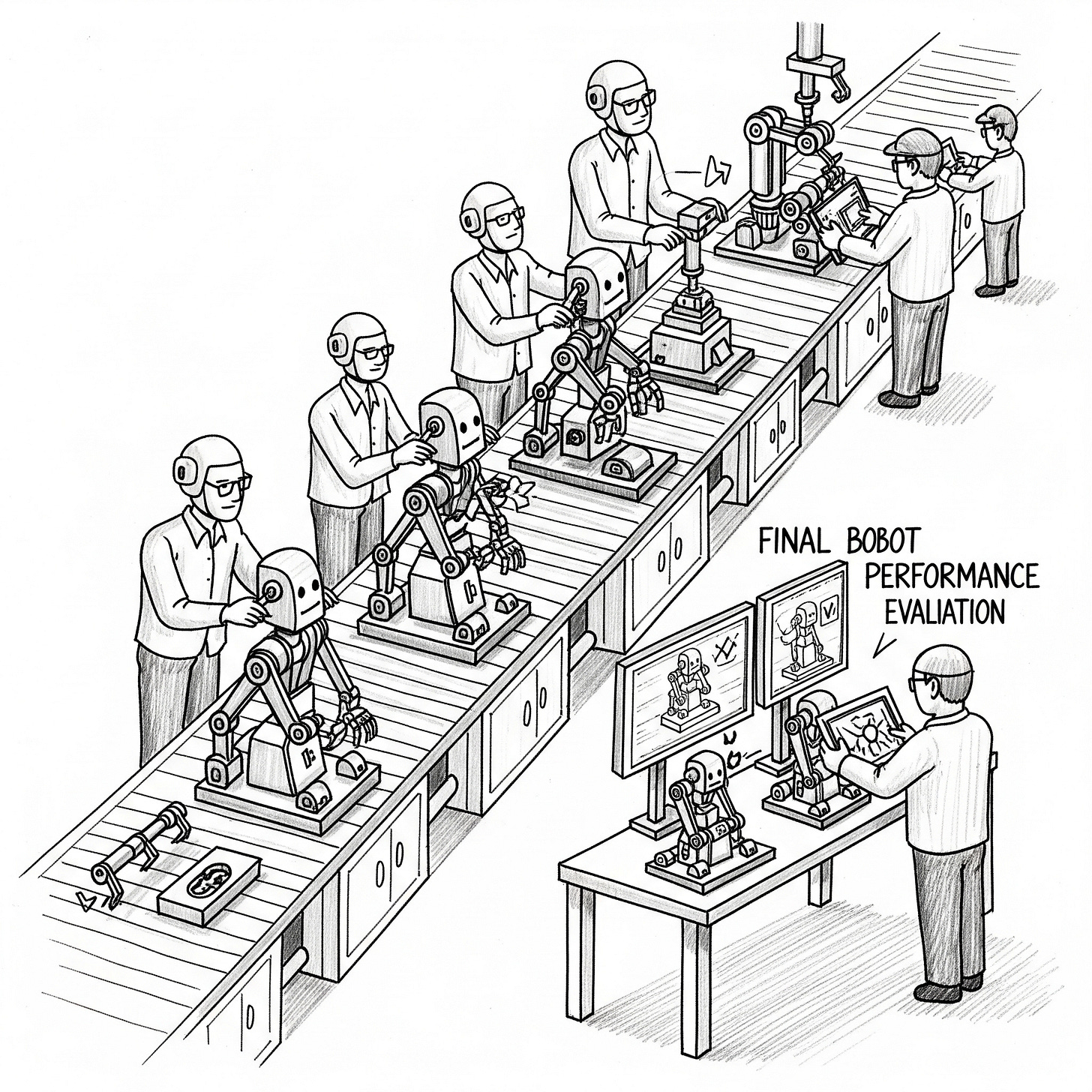

Vibe checking can only go so far.

Vibe checks are a method commonly used to build such systems. We want to answer the question, “Do the system’s responses feel right?” And yes, I use them all the time, and you should, too, as the primary way of ensuring your application works as you have designed it.

An obvious way to improve your application is to swap your LLM for a better one. These days, a new and better model seems to arrive every week, and sometimes it feels like every other day. But even though the LLM developer claims that these are “better” than previous models and many competitors’ models, this does not mean anything to you until you test the model in your own application.

Yes, we reach for vibe checks, and you should continue to do this, because people are the main users of your application. But when your requirements grow and your application becomes more complex, you will have to do more and more of these vibe checks.

For example, you may start with a handful of checks, say five, which you do every time your system changes. What do you do when you have to expand this to ten, twenty, fifty? How about a hundred items in your vibe check checklist? Doing all of that by hand is not sustainable.
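One way to picture the alternative is to write each checklist item down as code, so the whole list can be re-run whenever the system changes. The sketch below is only an illustration under my own assumptions: `EvalCase`, `run_evals`, `pass_rate` and `fake_app` are hypothetical names, `fake_app` is a stand-in for a real LLM application, and the simple string checks stand in for whatever "feels right" means in your application.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class EvalCase:
    name: str
    prompt: str
    check: Callable[[str], bool]  # returns True when the response is acceptable


def run_evals(app: Callable[[str], str], cases: list[EvalCase]) -> dict[str, bool]:
    """Send every case's prompt to the app and record whether its check passes."""
    return {case.name: case.check(app(case.prompt)) for case in cases}


def pass_rate(results: dict[str, bool]) -> float:
    """Fraction of cases that passed (0.0 when there are no cases)."""
    return sum(results.values()) / len(results) if results else 0.0


def fake_app(prompt: str) -> str:
    # Hypothetical stand-in for the real application; returns canned answers.
    canned = {
        "What is your refund policy?": "You can request a refund within 30 days.",
        "Summarise: The cat sat on the mat.": "A cat sat on a mat.",
    }
    return canned.get(prompt, "Sorry, I don't know.")


CASES = [
    EvalCase("mentions_refund_window", "What is your refund policy?",
             lambda r: "30 days" in r),
    EvalCase("summary_is_short", "Summarise: The cat sat on the mat.",
             lambda r: len(r.split()) <= 10),
    EvalCase("admits_unknown", "Who won the 2090 World Cup?",
             lambda r: "don't know" in r.lower()),
]

results = run_evals(fake_app, CASES)
for name, passed in results.items():
    print(f"{name}: {'PASS' if passed else 'FAIL'}")
print(f"pass rate: {pass_rate(results):.0%}")
```

Because `run_evals` only needs a function from prompt to response, the same cases can be pointed at a different model or prompt version to compare them, and growing the list from five checks to a hundred costs no extra manual effort per run.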

Evaluation systems are the answer.

This series of articles will discuss what evaluation systems are, why we need them, and, more importantly, how to approach building evaluations for your application.

Last month, I participated in a program to enhance my AI Engineering skills. With my background in software engineering, I encountered significant challenges when building LLM applications: specifically, the difficulty in creating robust and trustworthy systems. This is mainly due to the inherently uncertain and non-deterministic nature of the LLMs themselves.

The program, Building LLM Applications for Data Scientists and Software Engineers, led by Hugo Bowne-Anderson (one of my favorite online educators) and Stefan Krawczyk (creator of the open-source libraries Hamilton and Burr), proved to be incredibly valuable and focused.

See you in the following article!