#9 - Putting Our Bird Embedding Model to Work: Introducing the Web Frontend

To make our embedding model accessible and useful to a wider audience, we developed a modern front-end interface for similarity inference.

This article is part of a blog series about demystifying vector embedding models for image search use cases:

Part 1. So how do you build a vector embedding model? - Introduces vector embedding models and the intuition behind the technologies we can use to build one ourselves.

Part 2. Let's build our image embedding model - Shows a couple of ways to build embedding models - first by using a pre-trained model, and next by fine-tuning a pre-trained model. We use PyTorch to build our feature extractor.

Part 3. Modelling with Metaflow and MLFlow - Uses Metaflow to build our model training workflow, introducing the concept of checkpointing, and MLflow for experiment tracking.

Part 4. From Training to Deployment: A Simple Approach to Serving Embedding Models - Packaging your ML model in a Docker container opens it up to a multitude of model serving options.

Part 5. Putting Our Bird Embedding Model to Work: Introducing the Web Frontend (this article) - For our embedding model to prove useful to others, we have created a modern frontend to serve similarity inference to our users.

Hi friends,

In this final installment (hopefully!) of our project, we've reached the critical point where theory meets application. Only when our app users can directly interact with our embedding-model-powered similarity system will they fully appreciate its value.

To recap, in our first article, we introduced embedding models and then explored how to build them using PyTorch. We subsequently utilised tools like Metaflow and MLflow to streamline our MLOps process. Following that, we discussed how to containerise the model for efficient serving.

However, the true impact of these steps becomes evident only through visual, side-by-side comparisons. The challenge of replicating human-like visual comparison, identifying similarities and differences between bird images, is addressed through machine learning, as demonstrated in our previous articles.

Therefore, this article will shift its focus from technical details to a practical demonstration of the front-end interface, showcasing the embedding model's capabilities through bird image similarity searches.

The Bird Similarity Search System: An Overview

Before proceeding, let me visually summarise what we have accomplished. The lower left box illustrates the process we undertook to build the bird embedding model using PyTorch, Metaflow, and MLflow.

Following the creation of the embedding model, as shown in the lower right box, we utilised FastAPI and Docker to prepare our container for model serving. To enable image semantic search functionality, we selected LanceDB as our embedded vector database, facilitating rapid similarity searches.

Finally, the upper right box depicts the deployment-ready front-end, developed with ReactJS (Vite) and Docker, designed to showcase the system's capabilities to our users.

Search 1 - The Philippine Eagle

I was born in Cebu, an island in the middle of the Philippines, and even though I now call Australia home, I am still a proud Filipino. So the only bird that should go first is the Philippine Eagle.

Appearance: This bird has a very distinctive, almost comical appearance. It has a prominent, spiky crest of feathers on its head. Its face is pale with dark markings around the eyes. The beak is relatively short and thick. The body has a mix of brown and white feathers.

The application displays the top 8 matches. It could return more, but we wanted the results to sit nice and even on the screen. After the reference image is uploaded, the results appear in a split second.
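Each match in the results grid carries a percentage score. This article doesn't spell out how those percentages are computed, so the sketch below assumes they are cosine similarities between the query embedding and a stored embedding, scaled to 0 to 100 and rounded to two decimals. The helper names and the tiny 3-dimensional "embeddings" are hypothetical; real vectors from the fine-tuned model are far longer.

```python
import math

def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means identical direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def as_percentage(score: float) -> float:
    """Hypothetical display rule (an assumption): clamp negatives to 0, scale to 0-100."""
    return round(max(score, 0.0) * 100, 2)

# Toy vectors standing in for real image embeddings (assumption, not project data).
query = [0.9, 0.1, 0.4]          # the uploaded reference image
same_species = [0.8, 0.2, 0.5]   # a visually similar bird
other_species = [0.1, 0.9, 0.2]  # a visually different bird

print(as_percentage(cosine_similarity(query, same_species)))
print(as_percentage(cosine_similarity(query, other_species)))
```

Whatever the exact formula, the useful property is the same one the screenshots show: a close match scores near the top of the scale while unrelated birds fall well below it.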

Notice that the reference image was matched to a Philippine Eagle with an 87.94% similarity score, while the next most similar bird in the database scored a low 38.73%. There will be a little more delay if we deploy this to the cloud; even so, for this task it beats any generic LLM out there on both latency and capability. It goes to show that Generative AI, as great as it is, is not the answer to every use case.

For example, I showed this image to both Gemini and ChatGPT, with mixed results. Gemini identified the bird and suggested other similar birds in under a second, but it could only give me text descriptions. I know that's unfair to Gemini, as it's a language model after all. But it's multimodal, so it should have been able to show me pictures too.

ChatGPT did not do much better. It did try to generate images of similar birds, but it got stuck and returned an error after trying for a minute.

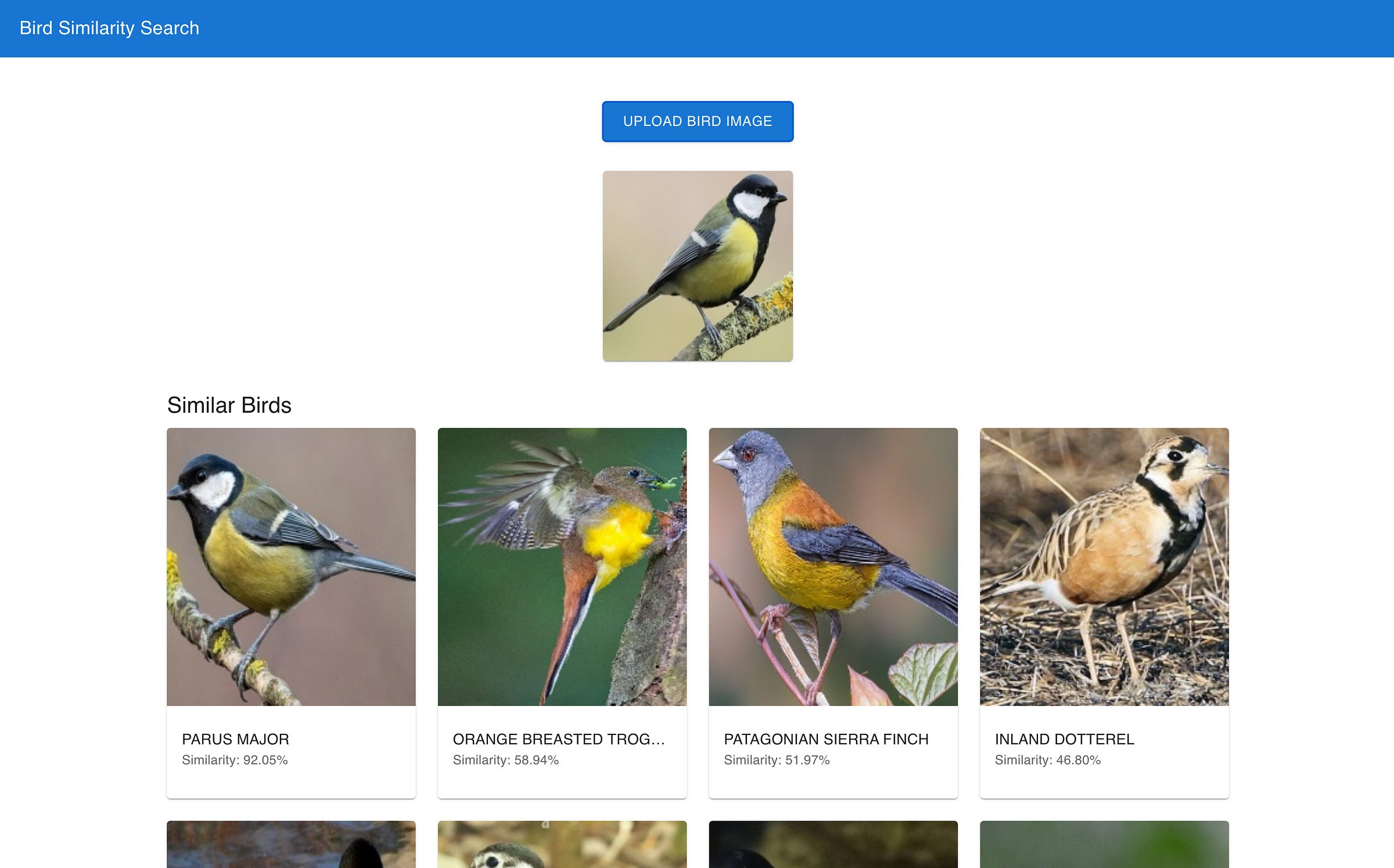

Search 2 - Parus major

Appearance: This is a Great Tit (Parus major). It has a black cap, white cheeks, a yellow breast with a black stripe down the center, a greyish back, and a dark beak. It's a small passerine bird.

Although our vector database contains only 50 bird images, it is enough to demonstrate the similarity search process. Because the bird images' vector embeddings are stored in LanceDB, we can run a similarity search simply by supplying a bird image.

Upon upload via FastAPI, the image is converted into a vector embedding, which is then submitted as a query to LanceDB. Because we have not created an index, LanceDB performs an exact, exhaustive (brute-force) search, which still completes within a fraction of a second.
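Conceptually, a search without an index is simple: score the query against every stored embedding and keep the best k. The plain-Python sketch below illustrates that brute-force step; it is not LanceDB's implementation. It assumes cosine similarity as the metric and k = 8 to match the front-end grid, and `bird_db` with its random 16-dimensional vectors is a hypothetical stand-in for the table of 50 bird embeddings. In LanceDB itself, the equivalent query is along the lines of `table.search(query_vector).limit(8)`.

```python
import heapq
import math
import random

def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def exact_search(query, database, k=8):
    """Brute-force k-nearest-neighbour search: score every vector, keep the top k.

    Cost is O(N * d) per query, trivial for 50 images but growing
    linearly with the size of the collection.
    """
    scored = ((cosine_similarity(query, vec), name) for name, vec in database.items())
    return heapq.nlargest(k, scored)  # sorted from most to least similar

# Hypothetical stand-in for the 50 stored bird embeddings (random toy vectors).
random.seed(0)
DIM = 16
bird_db = {f"bird_{i:02d}": [random.gauss(0, 1) for _ in range(DIM)] for i in range(50)}

# Querying with a stored vector should return that same bird first.
query_vector = bird_db["bird_07"]
results = exact_search(query_vector, bird_db)
for score, name in results:
    print(f"{name}: {score * 100:.2f}%")
```

Because every vector is visited, the result is guaranteed to be the true top k; the price is that query time scales with the number of stored images.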

For databases containing millions of images, an index becomes necessary to enable efficient approximate nearest-neighbour (ANN) search, which scales to much larger datasets but may result in lower recall.
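To see where the lower recall comes from, here is a toy version of the inverted-file (IVF) idea that LanceDB's IVF-PQ index builds on: vectors are grouped into partitions around centroids, and a query scans only the few partitions whose centroids are closest to it. This is a deliberately simplified sketch, not LanceDB's implementation: it samples stored vectors as centroids instead of training them with k-means, skips product quantisation entirely, and the names `n_partitions` and `n_probe` are my own (LanceDB exposes a similar knob on queries called `nprobes`). The 2,000 random vectors are hypothetical data.

```python
import heapq
import math
import random

def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def build_ivf(database, n_partitions, seed=0):
    """Assign each vector to its most similar centroid (toy: centroids are sampled, not trained)."""
    rng = random.Random(seed)
    centroids = [database[name] for name in rng.sample(sorted(database), n_partitions)]
    partitions = [[] for _ in centroids]
    for name, vec in database.items():
        best = max(range(n_partitions), key=lambda c: cosine_similarity(vec, centroids[c]))
        partitions[best].append(name)
    return centroids, partitions

def ivf_search(query, database, centroids, partitions, k=8, n_probe=2):
    """Scan only the n_probe partitions whose centroids are most similar to the query."""
    probe = heapq.nlargest(n_probe, range(len(centroids)),
                           key=lambda c: cosine_similarity(query, centroids[c]))
    candidates = [name for c in probe for name in partitions[c]]
    scored = ((cosine_similarity(query, database[name]), name) for name in candidates)
    return heapq.nlargest(k, scored)

def exact_search(query, database, k=8):
    scored = ((cosine_similarity(query, vec), name) for name, vec in database.items())
    return heapq.nlargest(k, scored)

# Hypothetical collection: 2,000 random 16-dimensional vectors.
rng = random.Random(42)
db = {f"img_{i}": [rng.gauss(0, 1) for _ in range(16)] for i in range(2000)}
centroids, partitions = build_ivf(db, n_partitions=20)

query = [rng.gauss(0, 1) for _ in range(16)]
exact = {name for _, name in exact_search(query, db)}
approx = {name for _, name in ivf_search(query, db, centroids, partitions, n_probe=2)}
recall = len(exact & approx) / len(exact)
print(f"recall@8 = {recall:.2f}")
```

Probing 2 of 20 partitions scans roughly a tenth of the data, so a true neighbour that landed in an unprobed partition is simply never seen. Raising `n_probe` trades speed back for recall; probing every partition degenerates into the exact search.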

Search 3 - Java Sparrow

Appearance: This is a Java Sparrow (Lonchura oryzivora). It has a distinctive thick pink beak, a black head with bold white cheek patches, a grey body, and a black tail. It's a small finch-like bird.

Comparing the Java Sparrow with the previously described Great Tit (Parus major), one might notice superficial similarities, such as the white cheek patches and greyish body coloration. However, that is where the similarities end.

Notably, the application reflects this distinction accurately. While both birds appear among the most similar results, the similarity scores reveal a significant difference: the Java Sparrow match scores 96.98%, whereas the Great Tit registers only 47.51%.

This effectively demonstrates that, despite some shared visual features, the application correctly recognises the Great Tit as distinctly different from the reference Java Sparrow.

Search 4 - Scarlet Tanager

Appearance: This is a Scarlet Tanager (Piranga olivacea). It has a bright red body, black wings and tail, and a thick, pale beak. It's a small songbird.

As with Search 3, the application identified the Bornean Bristlehead as the most similar bird in the database while still clearly differentiating it from the reference. Beyond the shared red and black coloration, these two species exhibit significant differences.

The Scarlet Tanager is a small songbird native to North and South America, while the Bornean Bristlehead is a medium-sized bird endemic to Borneo. Fortunately, neither species is endangered, and both are widespread within their respective habitats.

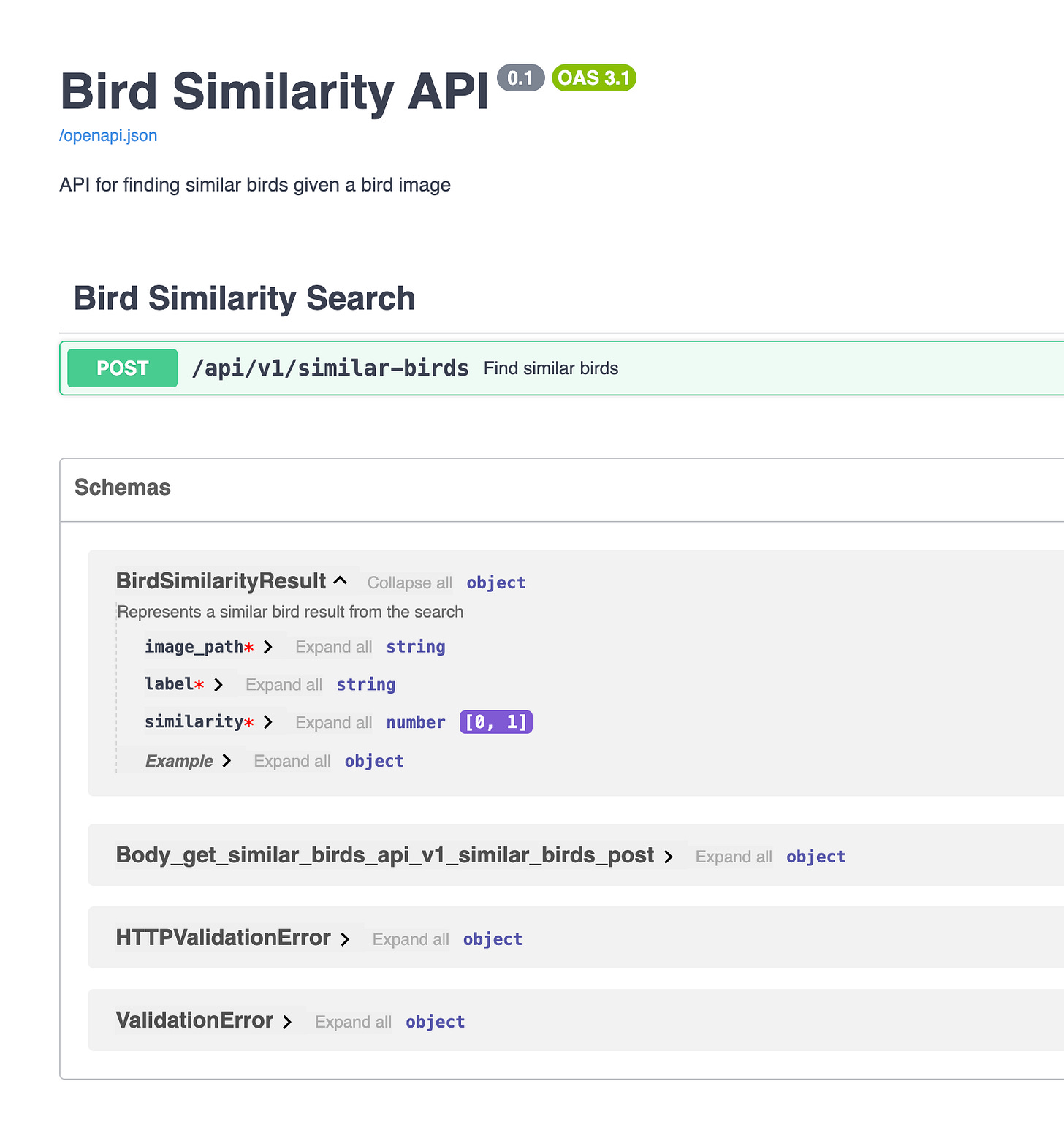

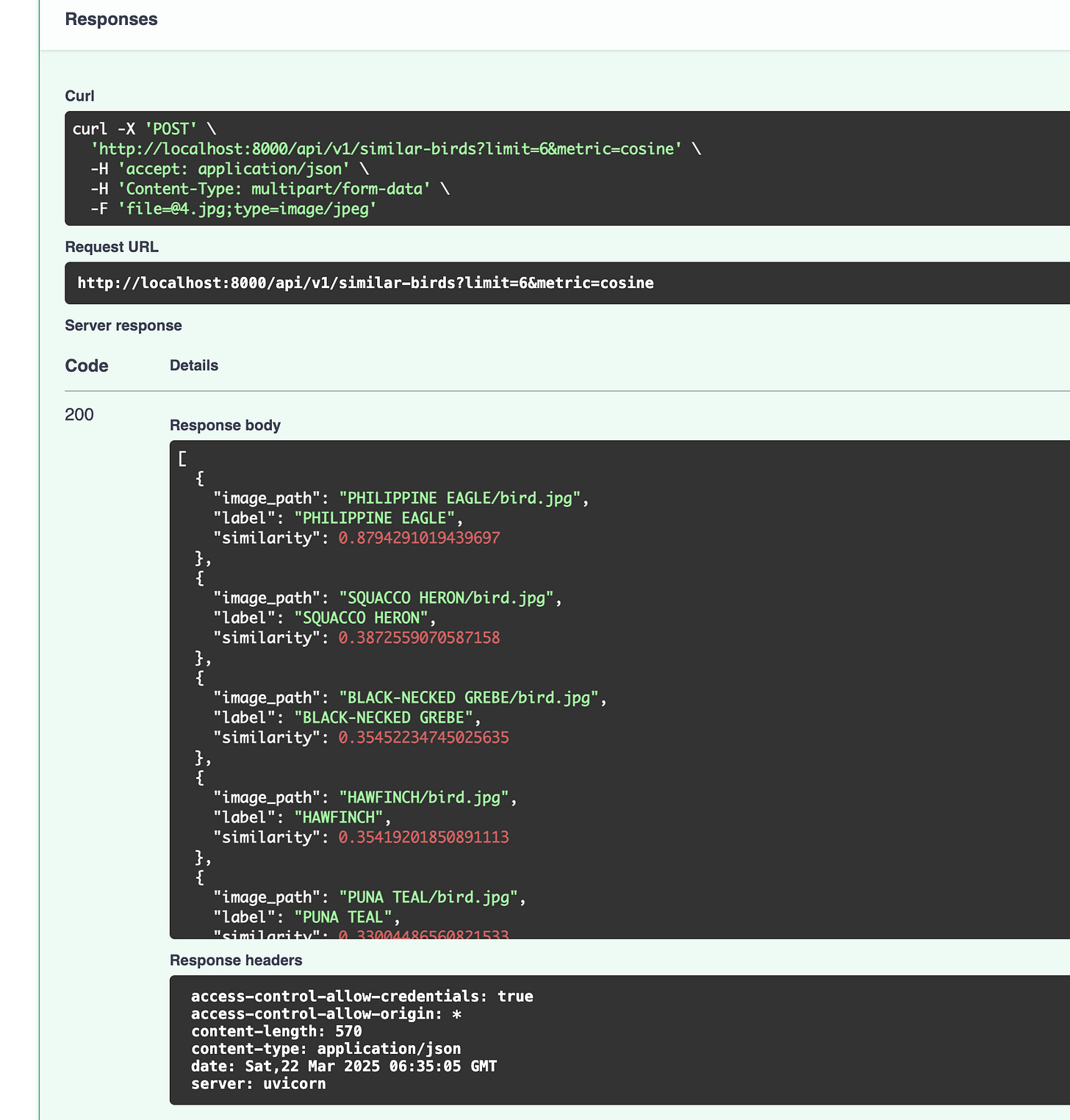

API Implementation for the Front End

As detailed in the previous article, we employed FastAPI to serve our similarity search system, which comprises the embedding model and LanceDB, our vector database. A notable feature of FastAPI is that it automatically generates an OpenAPI (formerly Swagger) specification for the API and, by default, serves interactive Swagger UI documentation at the /docs path, as illustrated below. You have likely encountered this type of API documentation before.

This interactive page lets you test the API directly: you can upload a bird image through the documentation and see the system's results firsthand. Please try it!
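The React front end talks to this same API over HTTP, so any client can do what the documentation page does. The sketch below builds a multipart/form-data image upload using only the Python standard library. The route `/search`, the form-field name `file`, the JPEG content type, and the JSON response are hypothetical placeholders, since this article doesn't show the actual endpoint; check the /docs page for the real route and field. Port 8000 is simply uvicorn's default.

```python
import json
import uuid
import urllib.request

API_URL = "http://localhost:8000/search"  # hypothetical route: confirm it on the /docs page

def build_upload_request(url: str, image_bytes: bytes, filename: str,
                         field: str = "file") -> urllib.request.Request:
    """Wrap an image in a multipart/form-data POST, the encoding FastAPI uses for file uploads."""
    boundary = uuid.uuid4().hex
    body = b"".join([
        f"--{boundary}\r\n".encode(),
        f'Content-Disposition: form-data; name="{field}"; filename="{filename}"\r\n'.encode(),
        b"Content-Type: image/jpeg\r\n\r\n",  # assumption: the upload is a JPEG
        image_bytes,
        f"\r\n--{boundary}--\r\n".encode(),
    ])
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={"Content-Type": f"multipart/form-data; boundary={boundary}"},
    )

def search_similar_birds(path: str) -> dict:
    """Send a local image to the (hypothetical) search endpoint and decode the JSON reply."""
    with open(path, "rb") as f:
        request = build_upload_request(API_URL, f.read(), path.rsplit("/", 1)[-1])
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.load(response)

# Build a request without sending it, to inspect what would go over the wire.
req = build_upload_request(API_URL, b"\xff\xd8\xff fake jpeg bytes", "eagle.jpg")
print(req.get_method(), req.full_url, req.get_header("Content-type"))
```

With the services running locally, calling `search_similar_birds("eagle.jpg")` would perform the same upload the front end does, assuming the placeholder route and field name match the real API.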

Local System Execution

Once the fine-tuned embedding model is in place and the bird embeddings have been ingested into LanceDB, the complete ReactJS front end and FastAPI back end can be run locally with the following command:

docker compose up --build

This command builds the images and starts the Docker Compose network, deploying both the front-end and back-end services and thereby providing the complete system described in this article.

Parting Words

Building this application has been a rewarding experience. I initially intended it as an educational endeavor for you, my readers and friends, but, as I've often found, these projects invariably benefit me as well.

On numerous occasions, I've revisited my past articles years after publication and been surprised by the refreshed understanding I gained. It's a familiar feeling of learning and comprehension, distilled from words and images within a simple blog post.

I hope this project has sparked your interest in the possibilities of how computers can interpret images. It would be wonderful if you felt encouraged to explore these concepts further and perhaps even apply them in your own projects.

My aim was to illustrate the potential of machine learning for visual analysis and similarity search, and I hope this has motivated you to continue learning and experimenting in this exciting field.

Why don’t you give it a go!

You can find all the code for this project on GitHub. Feel free to check it out and try it for yourself – it's all open source!

If you have any questions, drop a comment here on Substack, or send me a DM on LinkedIn.

And hey, if you're interested in collaborating on something cool, let's chat! I'm always up for new ideas and working with other passionate people.