#6 - Modelling with Metaflow and MLFlow

After building a model in a Jupyter Notebook, we port our code to be production-ready and we use Metaflow for orchestration and MLFlow for experiment tracking

This article is part of a blog series about demistifying vector embedding models for use in image embeddings:

Part 1. So how do you build a vector embedding model? - Introduces vector embedding models and the intuition behind the technologies we can use to build one ourselves.

Part 2. Let's build our image embedding model - Shows a couple of ways to build embedding models - first by using a pre-trained model, and next by fine-tuning a pre-trained model. We use PyTorch to build our feature extractor.

Part 3. Modelling with Metaflow and MLFlow - (this article) - Here we are using Metaflow to build our model training workflow, where we introduce the concept of checkpointing, and MLFlow for experiment tracking.

Part 4. From Training to Deployment: A Simple Approach to Serving Embedding Models -Packaging your ML model in a Docker container opens it up to a multitude of model serving options.

Part 5. Putting Our Bird Embedding Model to Work: Introducing the Web Frontend -For our embedding model to prove useful to others, we have created a modern frontend to serve the similarity inference to our users.

Hi friends,

In the previous article, we explored two approaches to creating an image embedding model, demonstrated through Jupyter notebooks: one for using a pre-trained model and another for fine-tuning that pre-trained model. These examples showed how to build an embedding model both by leveraging an existing pre-trained model and by fine-tuning it for our specific use case.

Image: We built an embedding model able to distinguish between bird species.

These are a good introduction, however this is still very far from having the ability to build machine learning models for real. Models that can be easily trained and prepared for online inference on the cloud, or in other words get them as close to production-ready as possible.

Introducing Metaflow

In this article, we’ll be introducing Metaflow, a fantastic machine learning orchestrator open-sourced in 2019 after being developed and battle-tested in Netflix to run their internal ML, AI and Data projects. I don’t want to have to repeat myself introducing Metaflow, their excellent documentation already does this better than I ever can.

According to their website: “Metaflow is a human-friendly Python library that makes it straightforward to develop, deploy, and operate various kinds of data-intensive applications, in particular those involving data science, ML, and AI.“

I have been using Metaflow for a while now, here’s a past project that used it as the ML orchestrator. This small project allowed me to understand first-hand the power of this tool.

Image: The stacks in Metaflow.

Metaflow super powers

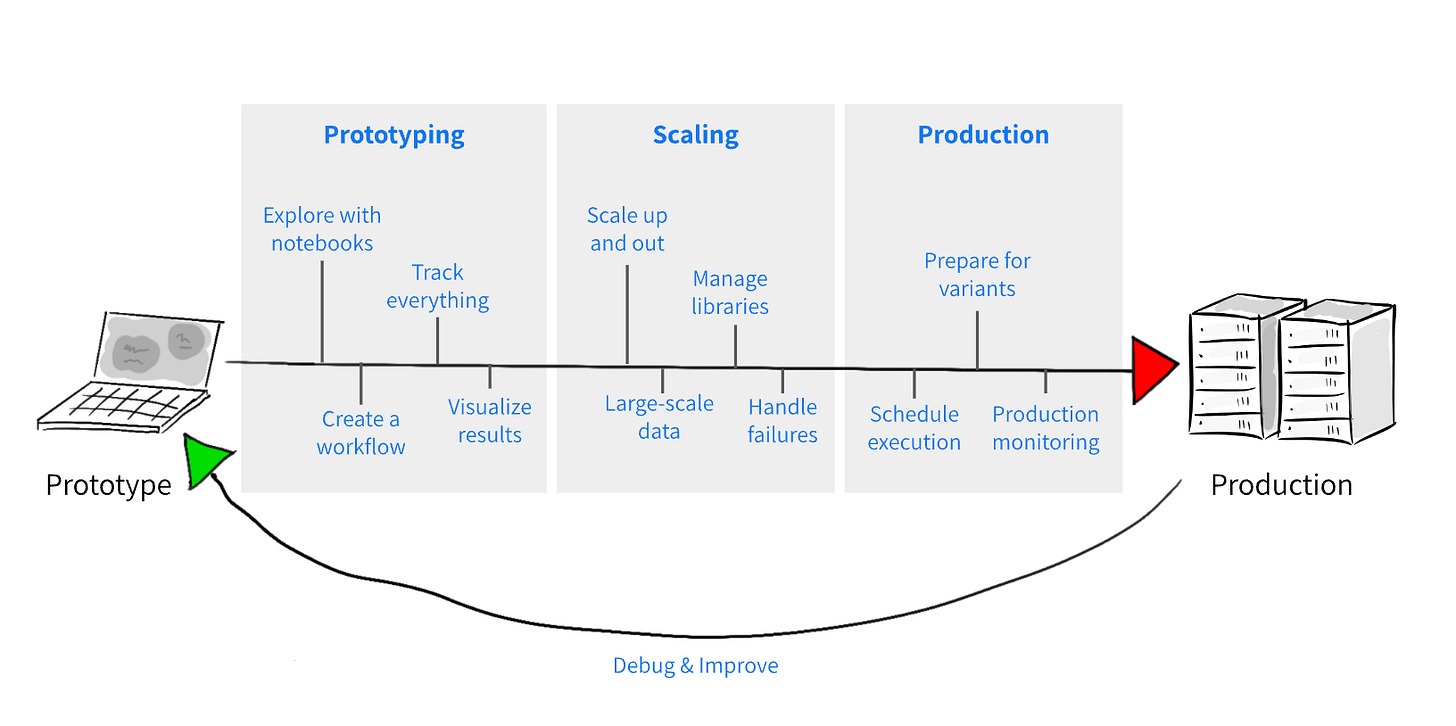

The real strength of this tool lies in its ability to streamline the transition from early prototyping to production. You can start by developing your solution locally on a laptop, ensuring an intuitive and straightforward experience. Then, with minimal code changes, you can scale your code to leverage your preferred cloud platform—whether AWS, Google Cloud, or Azure—for compute and storage.

Image: Metaflow enables a simpler process from developing locally to full production system.

Here’s the Metaflow pipeline I created for training our bird species embedding model (Github link).

This is truly ingenious because it empowers data scientists to productionise their models independently. Traditionally, this responsibility often fell to Machine Learning Engineers (MLEs), who had to port and adapt the models for production. While effective, this approach introduces an additional layer of complexity, which can disrupt the process.

Data scientists, who understand their models best, should be the ones bringing their creations to life. This tool eliminates that barrier, enabling them to do so with ease. Ultimately, it allows you to achieve more with fewer resources.

Since the day it’s been open sourced, more and more materials have been made available for us, such as these great tutorial resources. They have made it even easier by providing Metaflow in a sandbox environment, so that you don’t even have to set it up in your cloud account.

Using Metaflow to build our model

So to continue the the article and the bird classification use case, I’ve built an MLOps pipeline that is almost ready for production. I say almost, because I’m not really deploying this to any cloud environment, although there will be a minimal effort to do so. Here is the python code for this flow.

With Python, Metaflow and MLFlow, we have built an ML System that:

have the ability to build Python-based Directed Acyclic Graphs (DAGs)

have version control as a native feature

allow you to easily scale up your pipeline from prototype to production

enables you to have an ML system that is library agnostic, so that you can bring in your favourite ML library, like scikit learn, PyTorch or TensorFlow.

allows you to easily debug your pipelines, as well as create a more fault-tolerant system

With MLFlow, we have also added the ability to log and track experiments, to ensure a system that is dependable and reproducible. This includes a model registry functionality that makes tracking your models with ease.

Image: MLFlow metrics page showing this training run’s metrics

Model Checkpoints

One of the key features I’ve added to this workflow is support for model checkpoints. Before diving deeper, let’s clarify what checkpoints are. As described in an article on Towards Data Science, a checkpoint is a snapshot of a model’s entire internal state at a given point during training. At the end of training, this state is saved into a binary file, which can later be loaded to enable predictions.

However, best practices suggest going beyond saving the model only at the end of training. It’s far more effective to periodically save checkpoints at critical stages of the training cycle.

Image: Periodic checkpointing (image by Lak Lakshmanan)

Most people think of checkpointing simply as a way to save a trained model for later inference, and while this is important, the benefits of checkpointing extend much further. One of its most significant advantages is enabling a fault-tolerant training system—a feature I cannot stress enough.

Imagine you’re running a 10-hour training cycle, and disaster strikes—be it hardware failure, a software crash, or some other catastrophic issue—9 hours in. Without checkpoints, you’d need to start training from scratch, wasting time and resources on another 10-hour cycle. However, with periodic checkpoints, you can simply resume training from the most recent saved state. For instance, if you save your model every hour, you’d only need to retrain from hour 9, saving precious time and effort.

That said, it’s important to strike a balance in how frequently you save checkpoints. Saving too often can waste storage and slow down training, while saving too infrequently may not provide enough recovery points to be useful. Thoughtful checkpointing ensures you maximise efficiency and minimise risk.

Conclusion

Here’s the Metaflow pipeline I created for training our bird species embedding model (Github link).

As discussed in previous articles, we approached this by initially treating the task as a classification problem. If you examine the code, you'll notice it follows a standard PyTorch model training process. However, the key step is removing the classification head before saving the final checkpoint. This transforms the model from a classifier into an embedding model capable of generating a 2048-dimensional embedding vector for each bird image.

We ported our model training notebooks into a production-ready workflow using Metaflow DAGs. Metaflow already works for local development, however for MLFlow, there was a little bit of configuration to make it work in a local Docker setup.

This wraps up our process for building embedding models. It was an excellent opportunity to improve our understanding of deep learning for image processing. While ResNet and similar architectures are considered "old tech" (dating back to 2015), they remain foundational and widely used in industries like manufacturing and medical diagnostics.

Till then,

JO